Creating Game Assets with Generative AI

April 23, 2023

I wanted a deeper understanding of what it takes to produce 3D content with AI: which parts of the asset creation process are suited to AI, and where today’s tools might not be there yet. Time for a weekend project!

Image generation with DALL-E, Midjourney, Stable Diffusion and other models is already really, really good, so the low-hanging fruit to start with was texture and material generation.

My goal was to produce assets that one might use in a game today. AI-driven 3D generation is still in its infancy, and at least for the moment modeling is better sped up with more conventional methods, so I’m using AI for generating materials and textures only.

Some inspiration was taken from https://www.texturelab.xyz/, a material generation tool that is now part of https://www.scenario.com/.

The basic idea started from the notion that 3D models are made up of individual resources: mesh data, texture data, animation data etc. If those resources are extracted from models and stored individually, it becomes easier not just to edit them, but also to create new variations from them, or as I call them, “mutations”.

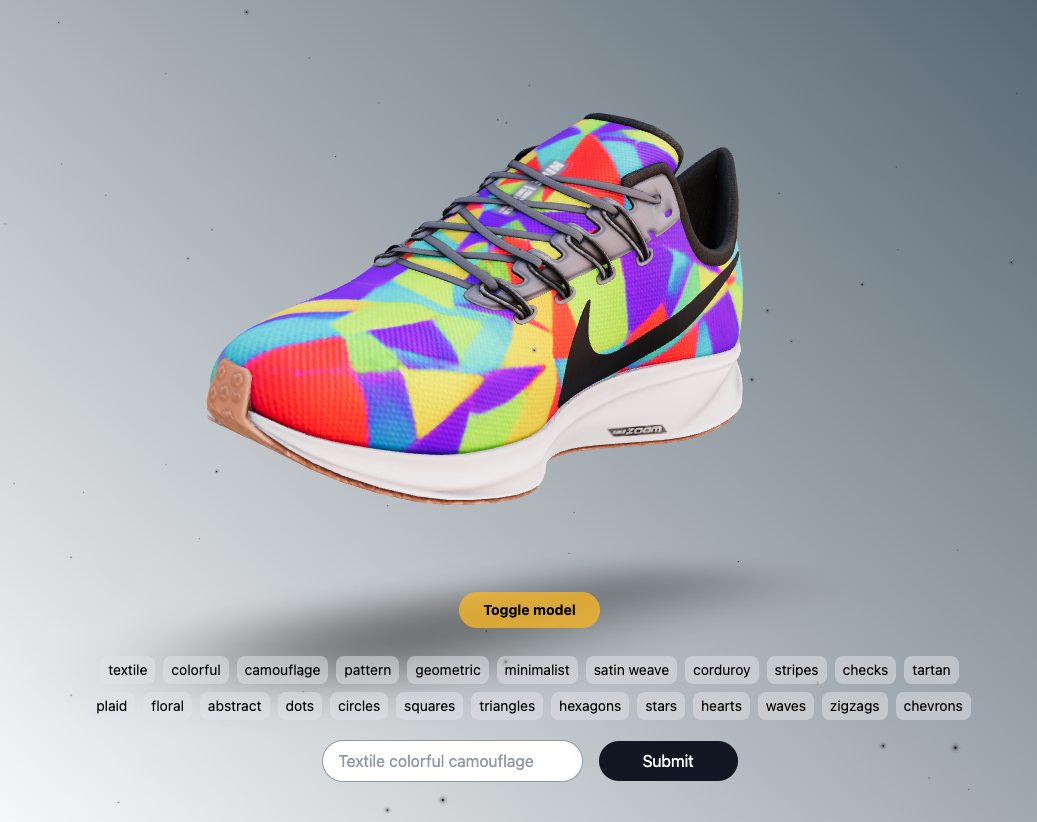

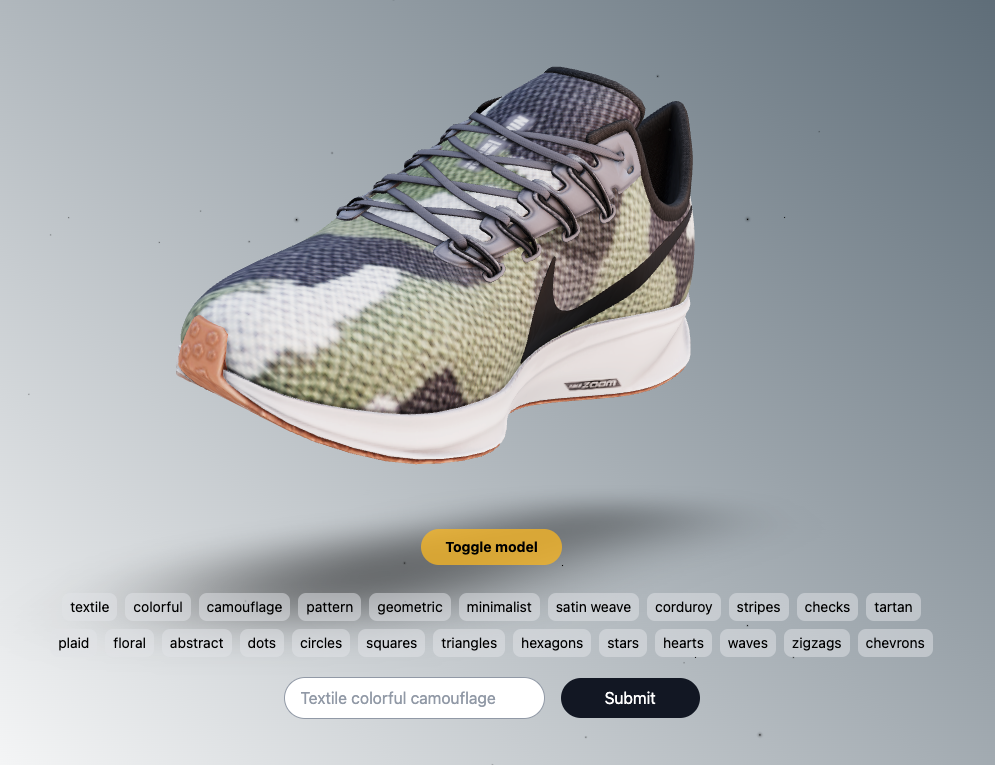

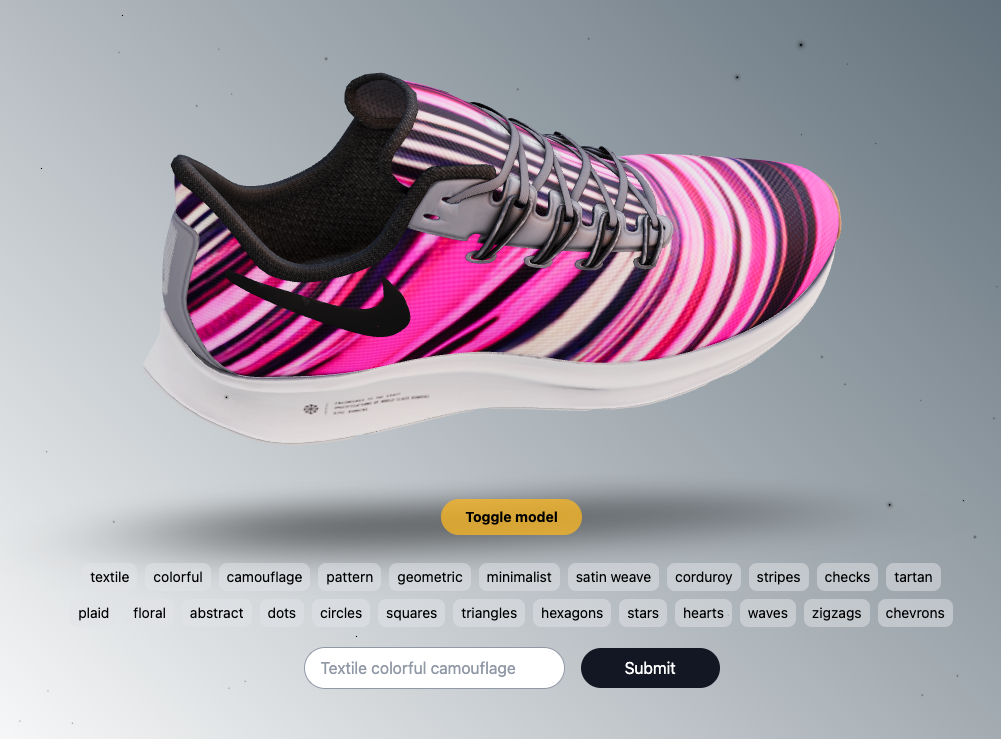

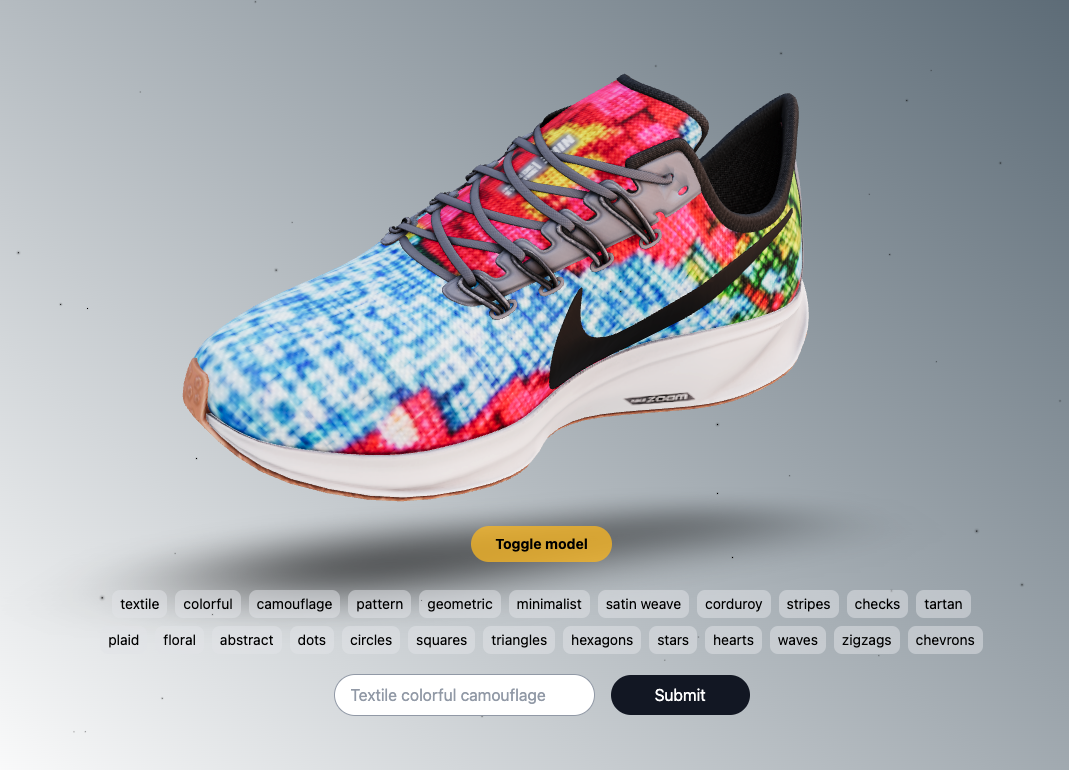

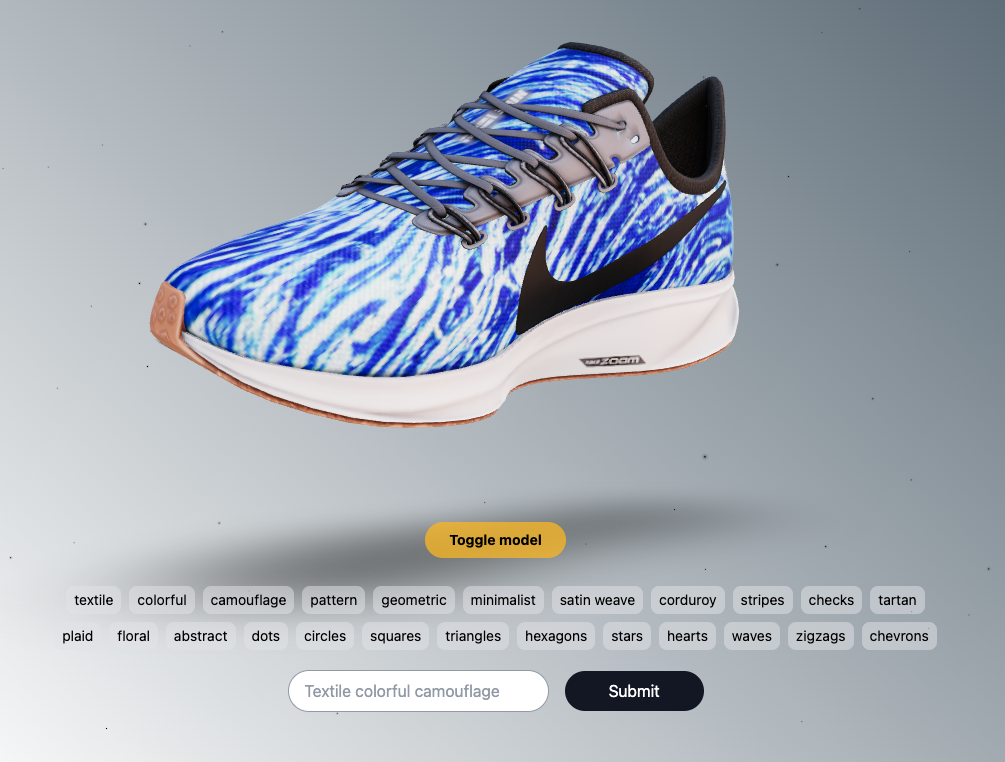

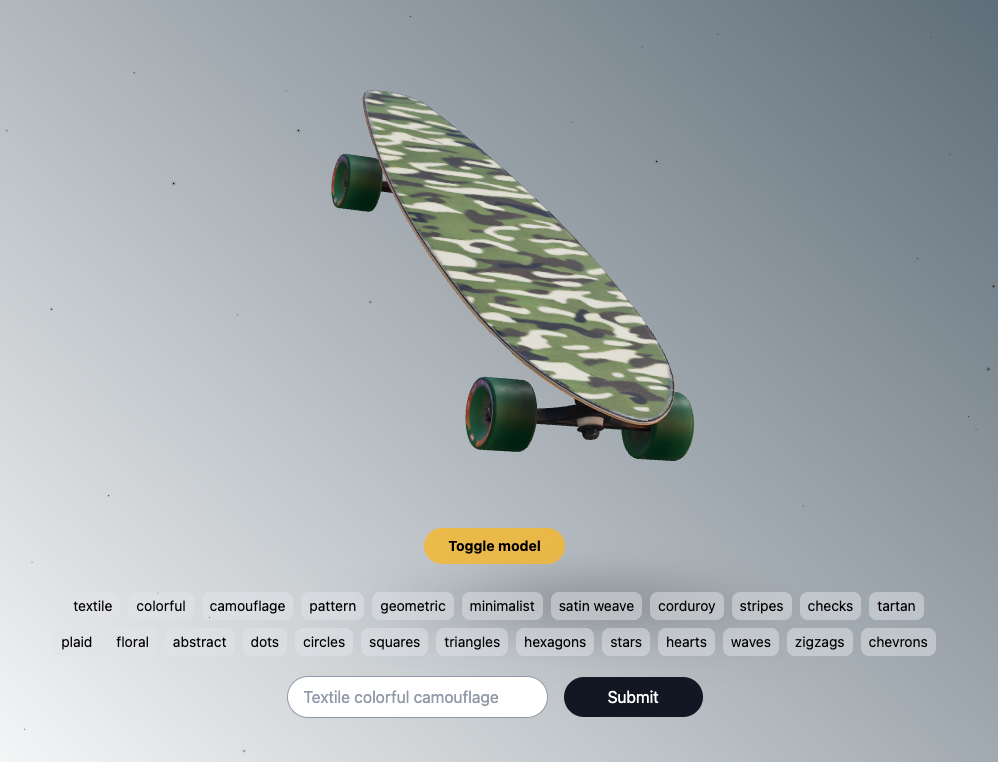

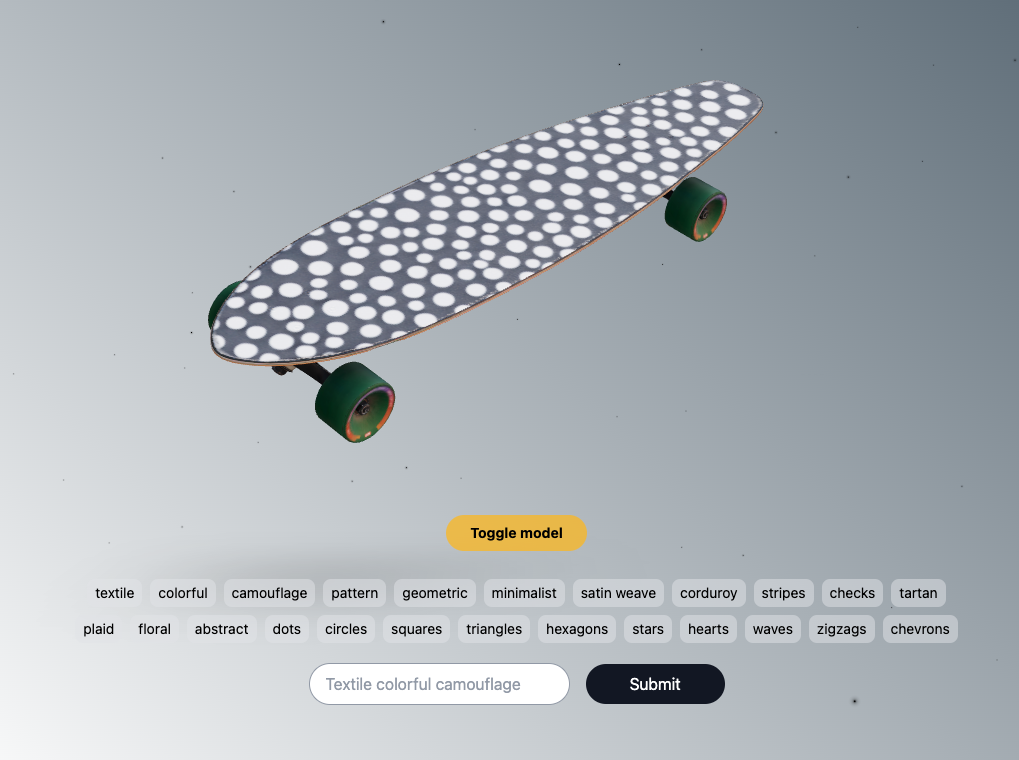

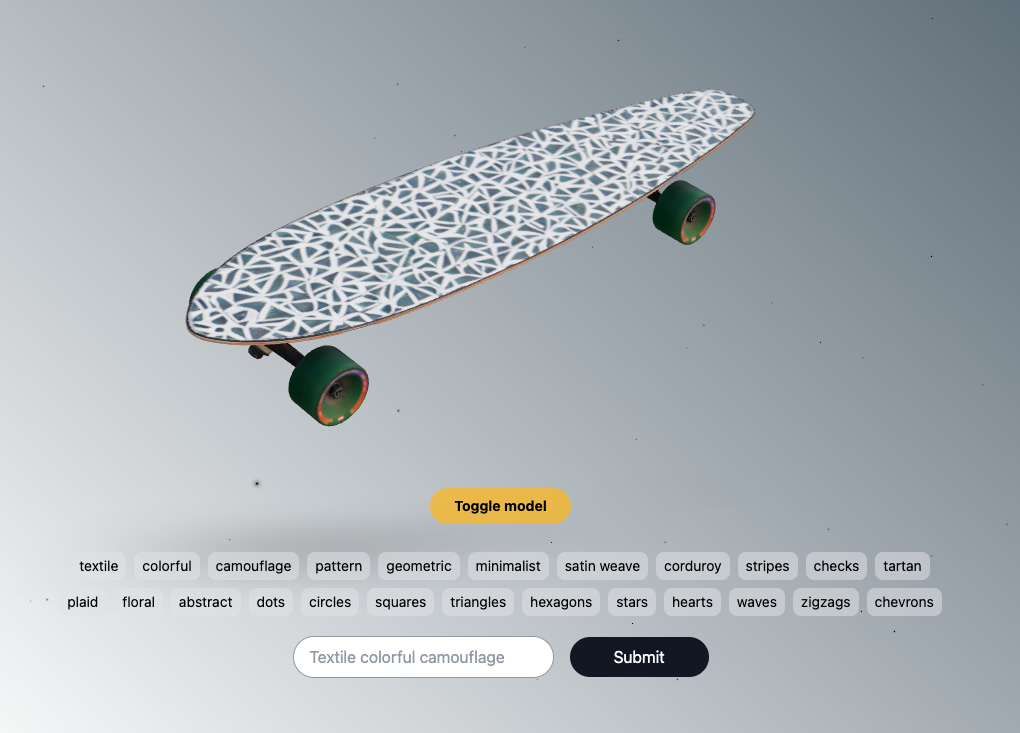

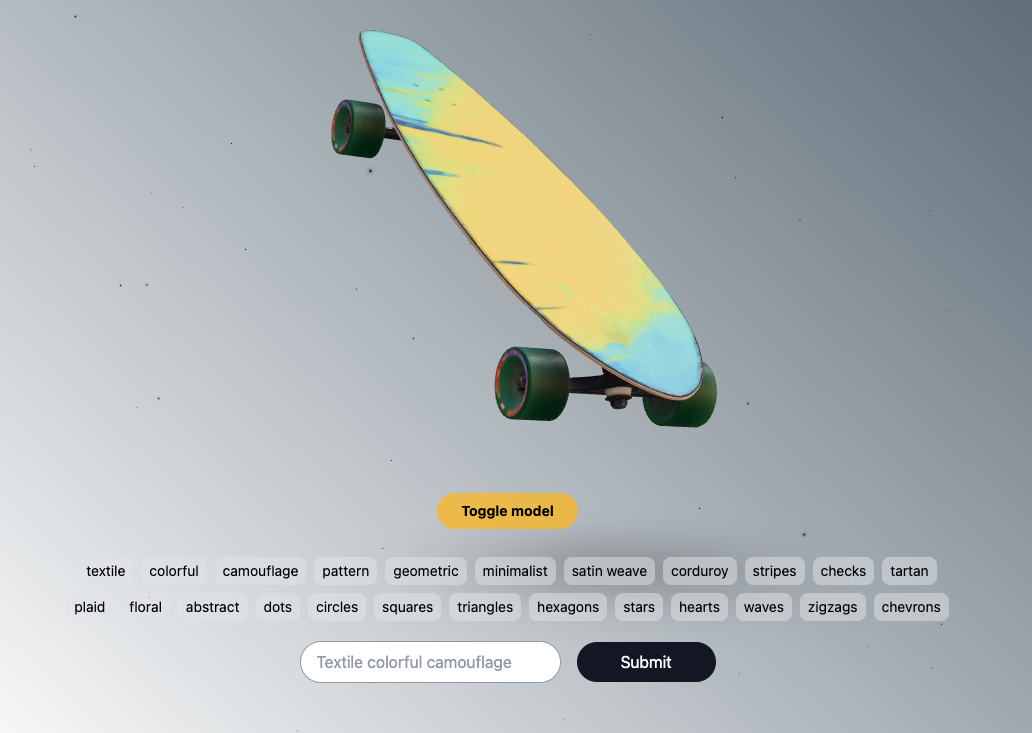

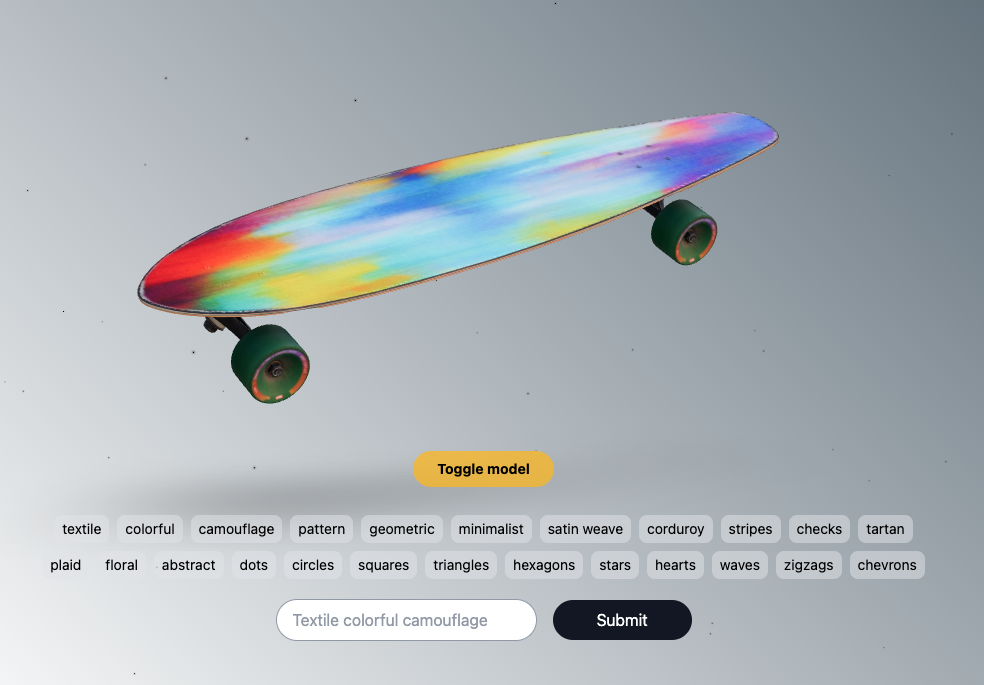

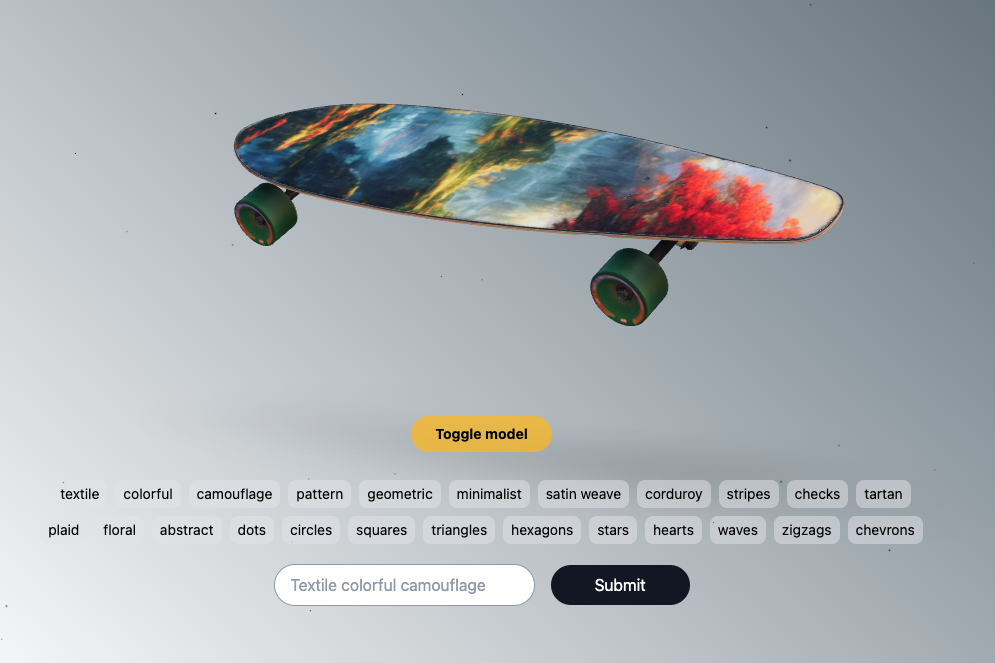

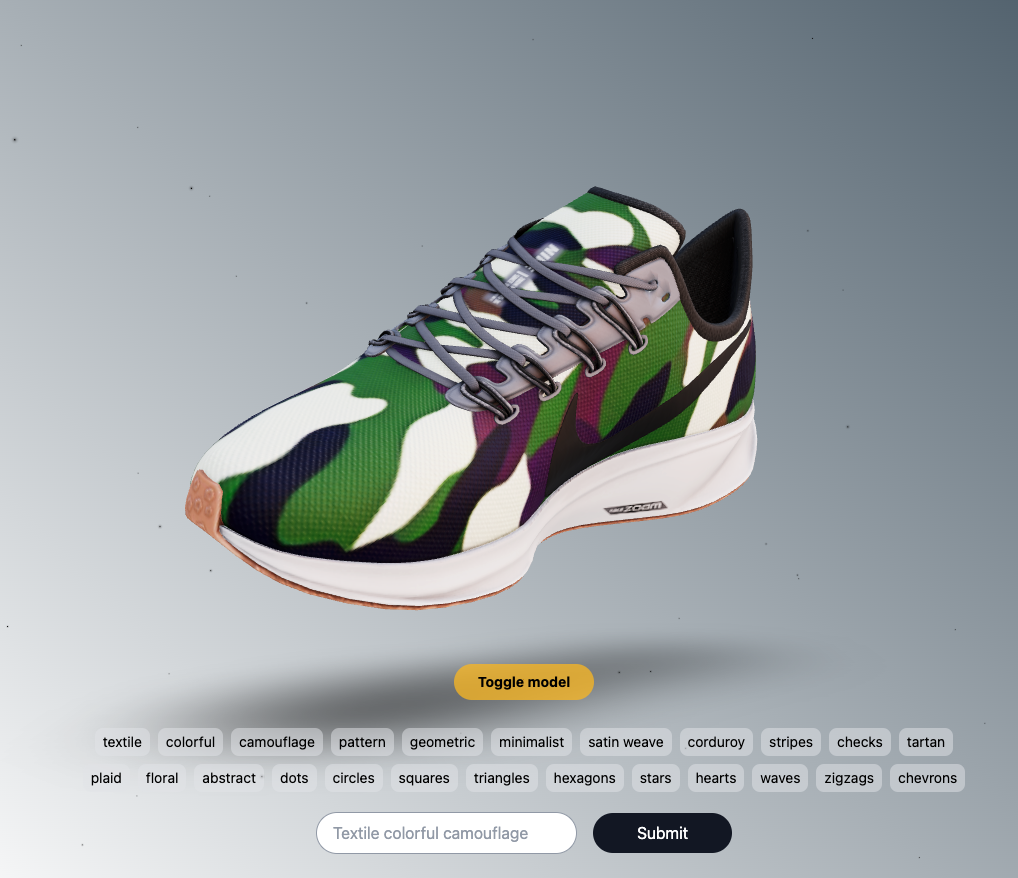

For the actual product, I wanted to create a demo of an AI-driven design tool that lets you generate sneakers, but built in such a way that it could be used for customizing any arbitrary 3D model (I ended up with two in the final demo: a sneaker and a skateboard).

- I wanted it to be easy to introduce any new 3D models that could be modified with AI

- I wanted it to be fast enough. Two things went into that:

  - pick a model that can run inference for 768x768px textures in about 5 seconds. I went for stability-ai/stable-diffusion

  - while that is still slow, keep the user engaged by notifying them as the various steps of the generation complete

- No behind-the-scenes prompt engineering (the prompt you define does not get modified). You can find plenty of options in the prompt builder, but using them is not mandatory
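The "notify the user as steps complete" idea can be sketched as a generator that yields one event per finished step. This is only an illustration, not the demo's actual code: the step names and the `run_with_progress` helper are hypothetical, and the lambdas stand in for the slow inference and model-building calls.

```python
from typing import Callable, Iterator

# Hypothetical: a step is a display name plus a zero-argument function doing the work.
Step = tuple[str, Callable[[], object]]


def run_with_progress(steps: list[Step]) -> Iterator[dict]:
    """Run the steps in order and yield an event as soon as each one finishes."""
    total = len(steps)
    for index, (name, work) in enumerate(steps, start=1):
        result = work()  # the slow part, e.g. an inference call taking a few seconds
        yield {"step": name, "done": index, "total": total, "result": result}


if __name__ == "__main__":
    # Placeholder work functions; a real app would call the image model here.
    demo_steps: list[Step] = [
        ("generate_image", lambda: "image-bytes"),
        ("update_texture", lambda: "texture-bytes"),
        ("build_model", lambda: "model-bytes"),
    ]
    for event in run_with_progress(demo_steps):
        print(f"{event['done']}/{event['total']} finished: {event['step']}")
```

Because events are yielded one at a time, a UI (or a server pushing updates to the browser) can show each intermediate result instead of a single long spinner.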

To power the concept of “mutations”, a couple of simple APIs were set up:

- create a resource (model or texture)

  - A) by uploading a file (e.g. skateboard.glb)

  - B) by creating a new texture from a prompt

- mutate a resource

  - A) generate a new 3D model resource from an existing 3D model

  - B) generate a new texture resource from an existing texture
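As a rough picture of what those APIs might look like, here is a minimal in-memory sketch. Everything in it is an assumption made for illustration: the `ResourceStore` class, its method names, the `res-N` id format, and the rule that a `.glb` upload is a model while any other file is a texture. The real service would also store the file contents and call the image model when creating a texture from a prompt.

```python
import itertools
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Resource:
    id: str
    type: str                        # "model" or "texture"
    source: str                      # file name or prompt this resource came from
    parent_id: Optional[str] = None  # set only for mutations


class ResourceStore:
    """Hypothetical in-memory stand-in for the create/mutate APIs."""

    def __init__(self) -> None:
        self._items: dict[str, Resource] = {}
        self._counter = itertools.count(1)

    def _add(self, type_: str, source: str, parent_id: Optional[str] = None) -> Resource:
        resource = Resource(f"res-{next(self._counter)}", type_, source, parent_id)
        self._items[resource.id] = resource
        return resource

    def create_from_file(self, filename: str) -> Resource:
        # Assumption: the file extension decides the resource type.
        type_ = "model" if filename.lower().endswith(".glb") else "texture"
        return self._add(type_, filename)

    def create_from_prompt(self, prompt: str) -> Resource:
        # A real implementation would run the image model here.
        return self._add("texture", prompt)

    def mutate(self, resource_id: str, source: str) -> Resource:
        # A mutation is a new resource of the same type that points at its parent.
        parent = self._items[resource_id]
        return self._add(parent.type, source, parent_id=parent.id)
```

Usage would be along the lines of `board = store.create_from_file("skateboard.glb")` followed by `store.mutate(board.id, "some change")`, which returns a new model resource rather than editing the original.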

Whenever an asset, whether a 3D model or a texture, is mutated, it keeps a record of its parent resourceId and replicates the parent’s type. One can now request a mutation of a skateboard model, asking for it to be modified by some resourceId.

Because the type (e.g. model or baseColor texture) carries over from one mutation to the next, a skateboard’s texture can be changed by simply modifying the model by a resourceId, without the user of the API explicitly defining how it should be applied. The resource keeps its type, hence it knows where to plug itself in.
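To make the "knows where to plug itself in" part concrete, here is a small sketch under assumptions of my own: a model resource holds a hypothetical `slots` mapping from texture type to resourceId, and `mutate_model` is an invented helper name. The caller never says which slot to use; the modifying resource's type decides.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Resource:
    id: str
    type: str                                   # e.g. "model" or "baseColor"
    parent_id: Optional[str] = None
    slots: dict = field(default_factory=dict)   # models only: texture type -> resourceId


def mutate_model(model: Resource, by: Resource, new_id: str) -> Resource:
    """Return a new model where `by` replaces whatever filled the slot of its type."""
    if model.type != "model":
        raise ValueError("only a model can be modified by another resource here")
    # The modifier's own type picks the slot; the original model is left untouched.
    slots = {**model.slots, by.type: by.id}
    return Resource(id=new_id, type=model.type, parent_id=model.id, slots=slots)


if __name__ == "__main__":
    board = Resource("m1", "model", slots={"baseColor": "t1"})
    flames = Resource("t2", "baseColor", parent_id="t1")  # a mutation of texture t1
    print(mutate_model(board, flames, "m2"))
```

The new model records `m1` as its parent and keeps the type `model`, so it can itself be mutated again in the same way.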

Essentially, that allows every asset to become an additional Lego brick - an element for future creations, enabling exponential composability over time.

So, when a new generation is submitted:

- first, a new 768x768px image is generated using Stable Diffusion. This provides us the first new resource.

- then, we use the original asset's texture as a starting point (which, you guessed it, is stored as a separate resource) and modify it with the generated image from Stable Diffusion. This results in a second new resource.

- finally, we create a new 3D model with the new texture applied (yes, it’s stored separately as well 😄). Though the demo doesn’t provide UI for it, you could take other people’s creations and remix them for new mutations.
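The three steps above can be sketched as one function that returns three new resources, each linked to its parent. This is a simplified illustration, not the demo's implementation: `text_to_image`, `blend` and `apply_texture` are hypothetical callables passed in by the caller (the post does not say how the texture is modified or how the model is rebuilt), and typing the generated image as `"texture"` is an assumption.

```python
import uuid
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Resource:
    id: str
    type: str
    data: object
    parent_id: Optional[str] = None


def new_id() -> str:
    return uuid.uuid4().hex


def generate(
    prompt: str,
    model: Resource,
    base_texture: Resource,
    text_to_image: Callable[[str], object],           # e.g. a Stable Diffusion call
    blend: Callable[[object, object], object],        # how the texture is modified (assumed)
    apply_texture: Callable[[object, object], object],
) -> tuple[Resource, Resource, Resource]:
    # Step 1: a fresh image from the prompt (first new resource).
    image = Resource(new_id(), "texture", text_to_image(prompt))
    # Step 2: the original texture modified by the image (second new resource).
    texture = Resource(
        new_id(), base_texture.type, blend(base_texture.data, image.data),
        parent_id=base_texture.id,
    )
    # Step 3: a new model with the new texture applied (third new resource).
    new_model = Resource(
        new_id(), model.type, apply_texture(model.data, texture.data),
        parent_id=model.id,
    )
    return image, texture, new_model
```

Since all three results are ordinary resources, any of them could be fed back in as the `model` or `base_texture` of a later call, which is what makes remixing other people's creations possible.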

The demo can be tried out at https://mutables.vercel.app

There are a ton of things this could be extended with. Some ideas:

- a texture and model resource could store the desired UV correspondence, so that if the model’s UVs ever change, the textures could still be used

- automatic segmentation of models to define more areas that can be customized

Thanks for reading! 🤓

Here are some examples of generated assets: